Background: On April 30, 2025, the court in the Epic v. Apple lawsuit issued a ruling finding that Apple had blatantly and deliberately violated the court's previous injunction, lied under oath, and done everything it could to undermine both compliance and the spirit of the previous ruling. I ran through the full ruling in threads on Mastodon and Bluesky if you'd like more background.

The main points the judge made:

- Apple picked a 27% commission, versus its standard 30% App Store fee, so that developers could not realistically choose to leave (the 3% difference is roughly what any credit card processor charges, so processing payments themselves would save developers nothing)

- Apple required developers to pay it a commission on ALL sales made within 7 days of sending a user to their website

- Apple required the payment link-out to appear as a small text link rather than a button, making it hard to see and less appealing to tap

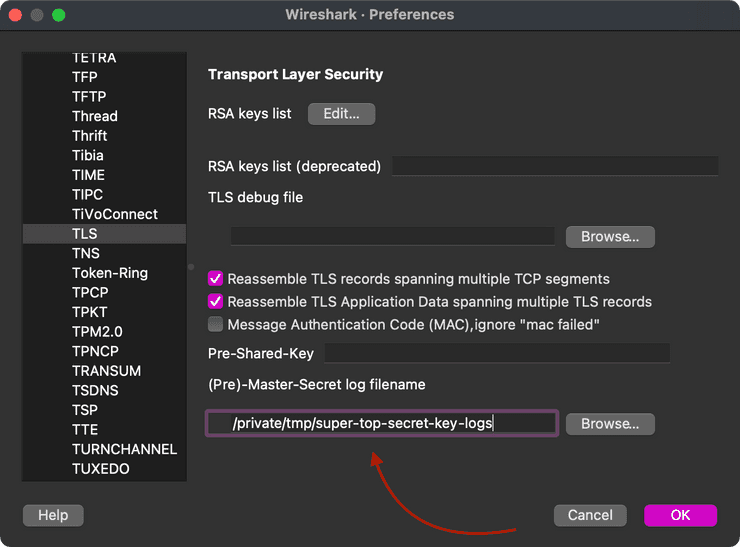

- Apple only allowed developers to link to a static URL, which couldn't include any account-specific credentials, making it less likely that people would complete the purchase outside the app

- Apple designed a warning screen to “scare” users away from clicking the link-out button, with Apple’s designers laughing about how to make it more hostile (the ruling calls this the “scare screen”)

- Apple manufactured a string of evidence to try to convince the court that the only viable outcome was the one that didn't require Apple to give up any money or control

- Apple improperly claimed privilege over hundreds of thousands of documents, slowing the legal process by months

The ruling is directed at Apple as a whole, but decisions are made by people, and the ruling made it very clear who was involved: Apple's upper management, all the way up to the CEO. But because the ruling frames everything in terms of the company's actions, it's hard to tell who did what. So let's take a look at which executives were involved in which decisions.

- Tim Cook was the tie-breaking vote on whether there should be a commission at all, and he signed off on 27%. He approved the restrictions on payment links: the hostile design that showed them only as a small text link rather than a button, and the static-URL requirement, which prevented passing along a cookie. And he worked on refining the copy of the scare screen. As CEO, he is of course ultimately responsible for everything, but the court determined that he actively worked on these decisions himself.

- Luca Maestri, as CFO, drove all of these discussions from a revenue angle. He was the main advocate for a 27% commission, even though others were suggesting lower commissions. He also contributed to the scare screen design.

- Alex Roman is a VP of finance, and he apparently lied to the court under oath, earning both himself and Apple a referral to prosecutors for criminal contempt. He testified that Apple didn't do any real research on what developers would have to pay if they processed payments on their own, an obvious and provable lie. And he testified that Apple didn't know what the fee would be, even though his boss had decided it 6 months earlier. What a guy.

- Phil Schiller appears to be the only executive at Apple who tried to take the L and do the reasonable thing. He was the lone advocate (at least among the handful of people the ruling mentions by name) for a no-commission model and for simply complying with the injunction. But he was also involved in the placement and design of the small text link for directing users out of the app, and he contributed to the anti-patterns of the scare screen. He is at least not responsible for the 27% commission, but he is not innocent here either.

Three things stick out to me.

First: the only things Apple executives considered were revenue and control. At no point did the user's experience enter the picture, except as a hand-wavey gesture towards "safety" when leaving an app. Apple gave up treating developers with decency a decade ago, knowing it has them by the throat and can make them do whatever it wants. They wanted legal compliance to come as close to the line as possible, and to stick a toe over whenever their arrogance made them believe they could get away with it. Beyond that, in every decision, money and power were what they chose, and the ruling includes evidence that this is how they thought when no one was looking.

Second: the hubris is overwhelming. Apple could've chosen a number that was similarly high, but not SO high that it made their intent obvious. They could've allowed buttons, or toned down the ridiculous scare screen, or shortened their 7-day commission carryover, or any number of things. But they made their intentions easy to see, and they left a paper trail. They really could not have more thoroughly engineered a situation that would make them look as deliberately anticompetitive as they have been here.

Third, and arguably most important: the rot went all the way to the top. Tim Cook signed off on all of this, and but for Schiller's protest over the 27% commission, so did the other executives involved. This wasn't something that got lost in a committee and escaped the CEO's notice. He was giving the thumbs up on all of it. If you find any of this as offensive as the judge did, there are many people to point fingers at, but they all lead directly back to Team Cook.

I resent that Apple has reached this point. If they did it here, with the eyes of a court on them, they are probably thinking this way everywhere. Their efforts in the European Union to defy regulators over the Digital Markets Act are probably working along the same lines. Whenever they have to choose between backing down and making a buck, we now have evidence suggesting which way they would go.

The Apple that would've chosen to fight for the customer is gone. It will not come back while the sickness runs this deep. I hope that we soon see the end of the era of Tim Cook.